
Which stimuli should be used for aided cortical testing?


An important consideration when performing aided cortical testing is the choice of stimuli used for the test.

Limitations of previous aided cortical stimuli

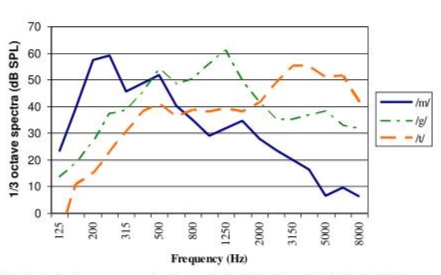

Some of the previously available aided cortical stimuli raised concerns, both about their frequency specificity and about the levels at which they were delivered, which follow from how the stimuli were calibrated (Figure 1).

Figure 1: Sources: Dillon, H. et al. (2006), Golding, M. et al. (2006), Golding, M. et al. (2007), Golding, M. et al. (2006).

The frequency-specificity problem is clear in Figure 1. There is considerable overlap between the spectra of the different stimuli, which can lead to cortical responses being recorded to stimulation of regions of the basilar membrane that do not correspond with the peak frequency region of the stimulus.

For example, if a response is detected to the high-frequency stimulus but the patient has much better thresholds in the mid or low frequencies, the detection could be driven by those better-hearing regions rather than by the high-frequency region.
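The overlap problem can be made concrete by treating each stimulus as occupying a simple frequency band and checking which bands share a range. The Python sketch below is a minimal illustration only: the band edges are hypothetical round numbers, not the measured spectra of any stimulus set discussed here, and real stimulus spectra have sloping skirts rather than hard edges.

```python
from itertools import combinations

def overlapping_pairs(bands):
    """Return (stimulus_a, stimulus_b, shared_low_hz, shared_high_hz) for every
    pair of stimuli whose passbands overlap. Bands that merely touch at one
    edge frequency are treated as non-overlapping."""
    overlaps = []
    for (name_a, (lo_a, hi_a)), (name_b, (lo_b, hi_b)) in combinations(bands.items(), 2):
        lo, hi = max(lo_a, lo_b), min(hi_a, hi_b)
        if lo < hi:  # non-empty intersection: both stimuli excite this range
            overlaps.append((name_a, name_b, lo, hi))
    return overlaps

# Hypothetical passbands in Hz, for illustration only.
broad = {"low": (200, 1500), "mid": (500, 3000), "high": (1500, 8000)}
narrow = {"low": (250, 500), "mid": (1000, 2000), "high": (3000, 5000)}

print(overlapping_pairs(broad))   # [('low', 'mid', 500, 1500), ('mid', 'high', 1500, 3000)]
print(overlapping_pairs(narrow))  # []
```

Each pair returned marks a frequency range in which one stimulus could evoke a response from the region nominally targeted by another; an empty list corresponds to a set with no overlap between its stimuli.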

These stimuli were calibrated to 65 dB SPL, which, as Figure 1 shows, means that all three were delivered at the same level as each other. However, the long-term average speech spectrum shows that this is not how real-life speech works, nor how hearing aids are programmed to work.

In reality, the high-frequency components of natural running speech are produced at much softer levels than the mid- and low-frequency components. Because all three original stimuli were delivered at the same level, they increased the possibility of recording cortical responses even when conversational speech cues were below the listener’s aided threshold, particularly in the critical high-frequency region.

In summary, the original stimuli were taken from live recordings of natural speech, all stimuli in the set were presented at the same level, and their frequency specificity is quite poor.

ManU-IRU stimuli

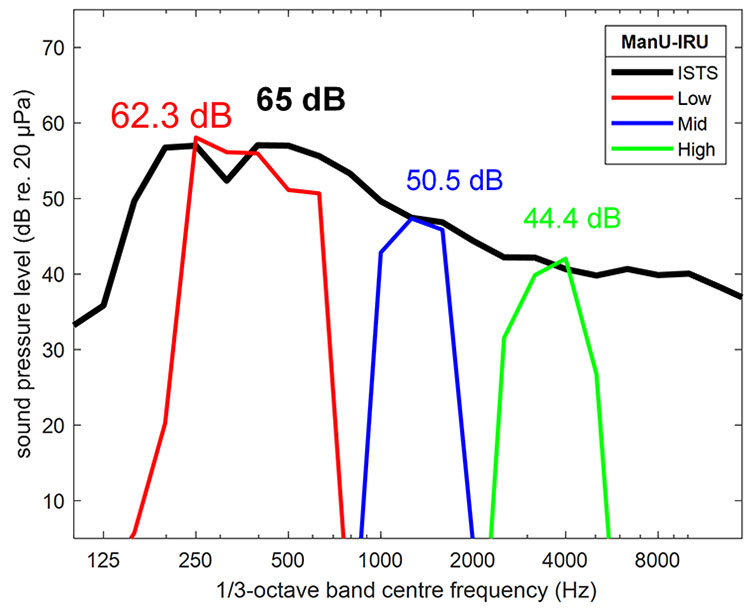

A newer set of stimuli, developed by the University of Manchester and the Interacoustics Research Unit, is now available. First, these stimuli are much more frequency specific, with no overlap between them.

They have also been calibrated with reference to the International Speech Test Signal (ISTS): each stimulus is presented at the level of its frequency band within the ISTS. This mirrors natural speech, in which the higher frequencies are produced at lower levels than the lower frequencies (Figure 2).
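One way to picture ISTS-referenced calibration is as setting each stimulus's RMS level to the level of its own frequency band in a speech-shaped reference, rather than to one common level. The Python sketch below assumes levels in dB relative to digital full scale, with the mapping to dB SPL handled by the system's transducer calibration. The band offsets in `BAND_OFFSET_DB`, the function names, and the test tone are hypothetical illustrations, not values or methods from the ISTS, Stone et al. (2019), or any Interacoustics software.

```python
import math

# Hypothetical offsets (dB) of each stimulus band relative to the overall speech level.
# Illustrative only: NOT values taken from the ISTS or from Stone et al. (2019).
BAND_OFFSET_DB = {"low": -3.0, "mid": -8.0, "high": -15.0}

def rms_db(samples, ref=1.0):
    """RMS level of a waveform in dB relative to `ref` (here: digital full scale)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms / ref)

def ists_referenced_targets(overall_db, offsets=BAND_OFFSET_DB):
    """Target level for each stimulus: overall speech level plus that band's offset."""
    return {name: overall_db + offset for name, offset in offsets.items()}

def scale_to_level(samples, target_db, ref=1.0):
    """Return a copy of `samples` scaled so that its RMS level equals `target_db`."""
    gain_db = target_db - rms_db(samples, ref)
    factor = 10 ** (gain_db / 20)
    return [x * factor for x in samples]

# Usage: a 1 kHz tone merely stands in for a "mid" stimulus waveform (fs = 16 kHz, 0.1 s).
fs = 16000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(1600)]
targets = ists_referenced_targets(overall_db=-20.0)
mid_stimulus = scale_to_level(tone, targets["mid"])
print(targets)                         # {'low': -23.0, 'mid': -28.0, 'high': -35.0}
print(round(rms_db(mid_stimulus), 6))  # -28.0
```

The point of the design is that the high-frequency target comes out lowest, following the falling speech spectrum, whereas an equal-level calibration would give all three stimuli the same target.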

Figure 2: Source: Stone, M. A. et al. (2019).

These stimuli were not derived from natural speech but were created synthetically, which is what gives them their excellent frequency specificity. Such narrow frequency bands cannot be obtained from natural speech tokens, which stimulate a much wider frequency range and so run the risk of measuring cortical responses to stimulation of regions of the basilar membrane that do not correspond with the peak frequency region of the stimulus.

Because this calibration method aligns the stimuli with the ISTS, the sounds are presented to the patient at levels representative of real-world speech. The assessment therefore reflects how the patient hears speech through their hearing devices.

HD-Sounds

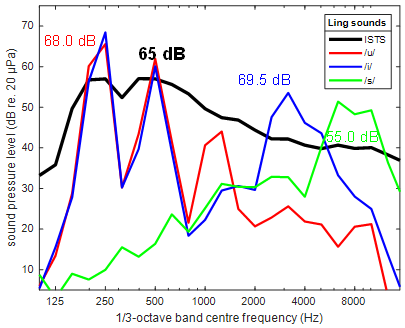

The HD-Sounds stimulus set is also available. These are updated versions of the original stimuli and come in two versions: unfiltered and filtered. The unfiltered stimuli are shown in Figure 3. They have relatively poor frequency specificity, with considerable overlap between the three speech tokens.

Figure 3: Sources: Dillon, H. (2023a), Dillon, H. (2023b).

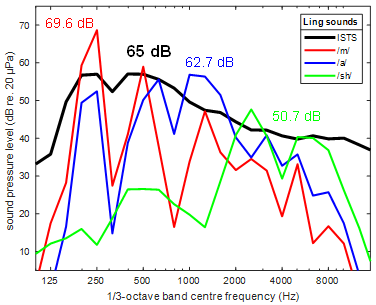

The filtered stimuli are shown in Figure 4. These offer some improvement in frequency specificity over the unfiltered sounds. Both sets have lower presentation levels than the original stimuli because they were designed to align with the ISTS; however, the high-frequency stimulus is still somewhat louder than the corresponding part of the ISTS signal.

Figure 4: Sources: Dillon, H. (2023a), Dillon, H. (2023b).

In summary, these updated versions of the original stimuli have lower presentation levels than the originals but are still louder than the ISTS, and the filtered versions offer some improvement in frequency specificity.

LING sounds

The LING sounds are also available for aided cortical testing. These are shortened versions of the original LING sounds, which makes them more suitable for evoking cortical responses (Figures 5 and 6).

Figure 5: Sources: Scollie, S., & Glista, D. (2012), Scollie, S. et al. (2012).

Figure 6: Sources: Scollie, S., & Glista, D. (2012), Scollie, S. et al. (2012).

Note that the presentation levels of the LING sounds are quite high. This is most likely because the sounds were produced in isolation, which can lead to them being produced more loudly than the same phonemes occur in natural running speech.

The LING sounds are calibrated using a calibration noise supplied with the set, which is designed to be reproduced at 60 dB SPL. Once the noise has been set to this level, all the stimuli in the set are correctly aligned.
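This calibration principle amounts to one common gain correction for the whole set: measure the supplied calibration noise, change the playback gain until it reads 60 dB SPL, and every stimulus moves by the same amount, so their relative levels are preserved. A minimal Python sketch; the measured noise level, the stimulus levels and the function names are hypothetical examples, not values from the LING sound set or from any clinical software.

```python
CAL_TARGET_DB_SPL = 60.0  # level at which the supplied calibration noise should be reproduced

def calibration_correction(measured_noise_db_spl, target_db_spl=CAL_TARGET_DB_SPL):
    """Gain change (dB) that brings the calibration noise to the target level."""
    return target_db_spl - measured_noise_db_spl

def apply_correction(stimulus_levels_db_spl, correction_db):
    """Apply the same correction to every stimulus so their relative levels are unchanged."""
    return {name: level + correction_db for name, level in stimulus_levels_db_spl.items()}

# Hypothetical example: the noise currently measures 63.5 dB SPL at the test position,
# and two stimuli currently play at these made-up levels.
correction = calibration_correction(63.5)
before = {"m": 70.0, "s": 66.0}
after = apply_correction(before, correction)
print(correction)  # -3.5
print(after)       # {'m': 66.5, 's': 62.5}
```

Because only one correction is applied, the level differences between the stimuli stay exactly as they were in the recorded set.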

Discussion

These stimulus sets differ in how natural they are, depending on how they were created.

However, informal listening to these short sounds reveals that none of them sounds like natural speech when presented. It is therefore questionable whether other advantages, such as frequency specificity, are worth sacrificing for an alleged naturalness of sound.

The presentation levels also differ. The ManU-IRU stimuli represent the conservative, fail-safe choice: a detected response guarantees that conversational speech in the given frequency range is audible. The HD-Sounds, and particularly the LING sounds, have considerably higher presentation levels and therefore carry a risk that responses are detected even when conversational speech cues are below the aided hearing threshold.
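The fail-safe argument can be written as a simple check. In an idealized model where a response is detected whenever the stimulus exceeds the aided threshold (ignoring recording noise, the sensation level needed to evoke a cortical response, and response variability), a detection only guarantees audible conversational speech if the stimulus is no louder than the speech band it represents. The Python sketch below encodes that logic; the function names and the dB values in the example are hypothetical.

```python
def interpret_detection(stimulus_db, speech_band_db, aided_threshold_db):
    """Idealized model: a response is detected iff the stimulus exceeds the aided threshold.
    Returns (response_detected, conversational_speech_audible) for one frequency band."""
    detected = stimulus_db > aided_threshold_db
    speech_audible = speech_band_db > aided_threshold_db
    return detected, speech_audible

def detection_is_fail_safe(stimulus_db, speech_band_db):
    """True if no aided threshold can give a detection while the speech band is inaudible,
    i.e. the stimulus is presented no louder than the corresponding speech band."""
    return stimulus_db <= speech_band_db

# Hypothetical high-frequency band: conversational speech at 50 dB SPL, aided threshold at 55 dB SPL.
print(interpret_detection(50.0, 50.0, 55.0))  # stimulus matched to speech: (False, False)
print(interpret_detection(60.0, 50.0, 55.0))  # stimulus 10 dB above speech: (True, False)
print(detection_is_fail_safe(50.0, 50.0), detection_is_fail_safe(60.0, 50.0))  # True False
```

The second case is the risk described above: the louder stimulus produces a detection even though speech in that band remains below the aided threshold.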

For these reasons, Interacoustics recommends the ManU-IRU stimuli. However, the final choice of stimulus set for aided cortical testing is left to the preference of the individual audiologist.

References

Dillon, H. et al. (2006). Automated detection of cortical auditory evoked potentials. [Abstract] The Australian and New Zealand Journal of Audiology, 28 (Suppl.), 20.

Golding, M. et al. (2006). Obligatory Cortical Auditory Evoked Potentials (CAEPs) in infants — a five year review. National Acoustic Laboratories Research & Development Annual Report 2005/2006. Chatswood, NSW, Australia: Australian Hearing, 15 - 19.

Golding, M. et al. (2007). The relationship between obligatory cortical auditory evoked potentials (CAEPs) and functional measures in young infants. Journal of the American Academy of Audiology, 18, 117-125.

Golding, M. et al. (2006). The effect of stimulus duration and interstimulus interval on cortical responses in infants. Australian and New Zealand Journal of Audiology, 28, 122-136.

Stone, M. A., Visram, A., Harte, J. M., & Munro, K. J. (2019). A Set of Time-and-Frequency-Localized Short-Duration Speech-Like Stimuli for Assessing Hearing-Aid Performance via Cortical Auditory-Evoked Potentials. Trends in Hearing, 23, 233121651988556.

Dillon, H. (2023a). Phoneme levels for use in evoked cortical response measurement [Internal report].

Dillon, H. (2023b). Preparation of speech stimuli for cortical testing [Internal report].

Scollie, S., & Glista, D. (2012). The Ling-6(HL): Instructions.

Scollie, S., Glista, D., Tenhaaf, J., Dunn, A., Malandrino, A., Keene, K., & Folkeard, P. (2012). Stimuli and Normative Data for Detection of Ling-6 Sounds in Hearing Level. American Journal of Audiology, 21(2), 232–241.
